Abstract

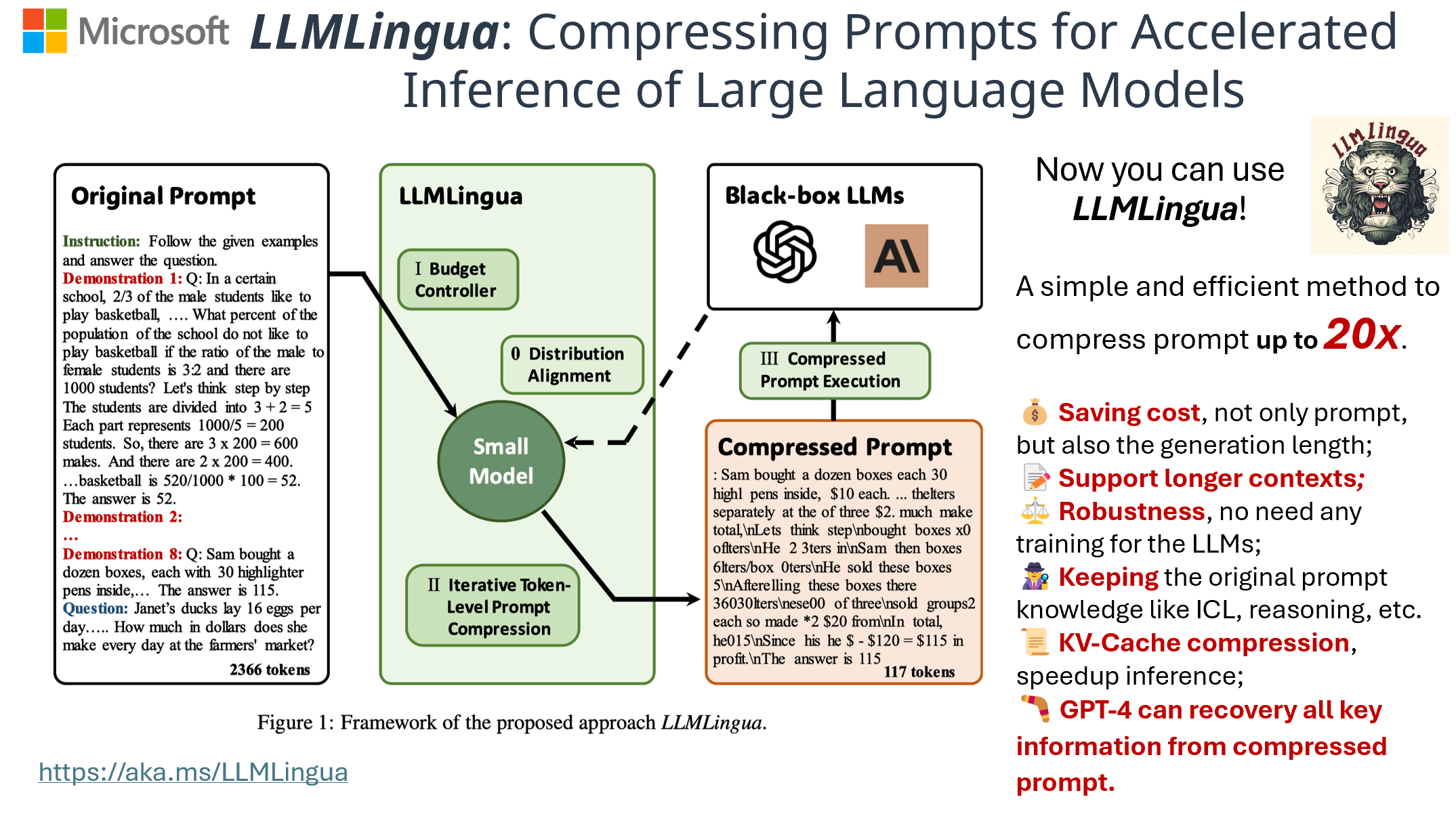

Large language models have been applied in various applications due to their astonishing capabilities. With advancements in technologies such as chain-of-thought (CoT) prompting and in-context learning (ICL), the prompts fed to LLMs are becoming increasingly lengthy, even exceeding tens of thousands of tokens. To accelerate model inference and reduce cost, this paper presents LLMLingua, a coarse-to-fine prompt compression method that involves a budget controller to maintain semantic integrity under high compression ratios, a token-level iterative compression algorithm to better model the interdependence between compressed contents, and an instruction tuning based method for distribution alignment between language models. We conduct experiments and analysis over four datasets from different scenarios, i.e., GSM8K, BBH, ShareGPT, and Arxiv-March23; showing that the proposed approach yields state-of-the-art performance and allows for up to 20x compression with little performance loss.

Insights

Natural language is redundant, amount of information varies.

LLMs can understand compressed prompt.

There is a trade-off between language completeness and compression ratio. (LLMLingua)

GPT-4 can recover all the key information from a compressed prompt-emergent ability. (LLMLingua)

For more details, please refer to the paper LLMLingua.

Why LLMLingua?

Building on the intuition mentioned earlier, LLMLingua leverages small models' perplexity to measure the redundancy within a prompt. It has designed three modules, as illustrated above, to assign varying compression rates to different segments within the prompt. This approach takes into account the conditional probabilities between compressed tokens and other tokens to better establish a sensitive distribution. Moreover, to make small models more attuned to various black-box models, LLMLingua introduces an alignment mechanism that aligns small models more closely with the semantic distributions of LLMs.

LLMLingua offers the following advantages:

- It can be directly used for black-box LLMs and helps save computation and financial costs, up to 20x.

- It is a highly robust method that requires no training of the LLMs and is applicable to different LLMs, such as GPT-4, GPT-3.5-Turbo, Claude, Mistral, etc.

- After compression, it allows the model to support longer context inputs.

- LLMLingua effectively retains the capabilities of LLMs, including reasoning, in-context learning, etc.

- LLMLingua can also be used for KV Cache compression, improving decoding speed.

- Prompts compressed by LLMLingua can be effectively decompressed by GPT-4, retaining vital information.

Experiments Results in Reasoning and ICL scenarios

We tested LLMLingua in various scenarios, including reasoning, in-context learning (ICL), summarization, and dialogue. The results in the GSM8K and Big-bench Hard Benchmark are listed below. Notably, within the GSM8K, LLMLingua was able to retain the reasoning capabilities of LLMs at a 20x compression ratio, with only a 1.5% loss in performance. For more detailed experimental results, please refer to the paper.

| Methods | GSM8K | BBH | ||||

|---|---|---|---|---|---|---|

| EM | Tokens | 1/𝜏 | EM | Tokens | 1/𝜏 | |

| Full-shot | 78.85 | 2,366 | - | 70.07 | 774 | - |

| Sentence Selection | 66.67 | 195 | 12x | 46.00 | 109 | 7x |

| Selective-Context | 44.20 | 157 | 15x | 47.37 | 108 | 7x |

| GPT4-Generation | 56.33 | 188 | 20x | 26.81 | 101 | 8x |

| LLMLingua | 77.33 | 117 | 20x | 56.85 | 110 | 7x |

| Zero-shot | 48.75 | 11 | 215x | 32.32 | 16 | 48x |

| Simple Prompt | 74.9 | 691 | 3x | - | - | - |

Latency Results

We also evaluated LLMLingua's performance in terms of latency. Specifically, our tests were conducted on a V100-32GB machine, with prompts sourced from GSM8K, approximately 2.3k in length. The terms "End-to-End w/ and w/o" refer to the total latency time, including the request process to the black-box LLMs, while "LLMLingua" refers to the time taken for compression. Our findings indicate that our method can achieve a practical acceleration of between 1.7x and 5.7x.

| 1/𝜏 | 1x | 2x | 5x | 10x |

|---|---|---|---|---|

| End-to-End w/o LLMLingua | 8.6 | - | - | - |

| End-to-End w/ LLMLingua | - | 4.9 (1.7x) | 2.3 (3.3x) | 1.3 (5.7x) |

| LLMLingua | - | 0.8 | 0.3 | 0.2 |

Conclusion

We introduce a coarse-to-fine algorithm for prompt compression, named LLMLingua, which is based on the small LM’s PPL for black-box LLMs. Our approach consists of three modules: Budget Controller, Iterative Token-level Compression, and Alignment. We validate the effectiveness of our approach on 4 datasets from different domains, i.e., GSM8K, BBH, ShareGPT, and Arxiv-March23, demonstrating that our method achieves state-of-the-art performance across all datasets, with up to 20x compression with only a 1.5 point performance drop. Moreover, we observe that LLMs can effectively restore compressed prompts, and prompt compression contributes to a reduction in generated text length. Our approach holds substantial practical implications, as it not only reduces computational costs but also offers a potential solution for accommodating longer contexts in LLMs. The method of compressing prompts has the potential to enhance downstream task performance by compressing longer prompts and to improve the LLMs’s inference efficiency by compressing the KV cache.

BibTeX

If you find this repo helpful, please cite the following papers:

@inproceedings{jiang-etal-2023-llmlingua,

title = "{LLML}ingua: Compressing Prompts for Accelerated Inference of Large Language Models",

author = "Huiqiang Jiang and Qianhui Wu and Chin-Yew Lin and Yuqing Yang and Lili Qiu",

editor = "Bouamor, Houda and

Pino, Juan and

Bali, Kalika",

booktitle = "Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing",

month = dec,

year = "2023",

address = "Singapore",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2023.emnlp-main.825",

doi = "10.18653/v1/2023.emnlp-main.825",

pages = "13358--13376",

}